April 19, 2025

As a child of the ’80s who grew up during the popularity of the two “Terminator” movies (stories built around the dystopian premise that learning machines become self-aware and start a war to exterminate humans), I find the idea of artificial intelligence a bit unsettling.

I know I’m not in the minority here. The spectrum of fear surrounding AI ranges from general uneasiness with having computers specifically programmed to emulate human beings to doomsday scenarios of all of us losing our jobs and becoming enslaved to our computer overlords.

I don’t know what the future of AI looks like. And after attending a two-day summit on AI, Ethics and Journalism this past week, organized by the Poynter Institute and hosted by the Associated Press in New York City, I can confidently say: No one else does, either.

But here’s one thing I do know: It’s here, whether we like it or not.

Even if you don’t realize it, you’re most likely using it daily.

Aside from the platforms set up to use AI via prompts from humans, such as ChatGPT and Google Gemini, companies have been baking AI into their technology in more subtle ways you may not have noticed.

For example, have you ever been writing an email when it starts suggesting how to finish your sentence? That’s AI. Ever used a chat feature with specific prompts during a customer service call or online session? More AI. Ever searched for something on Google and seen a paragraph pop up at the top of the results with an overview answering your question? 100% AI.

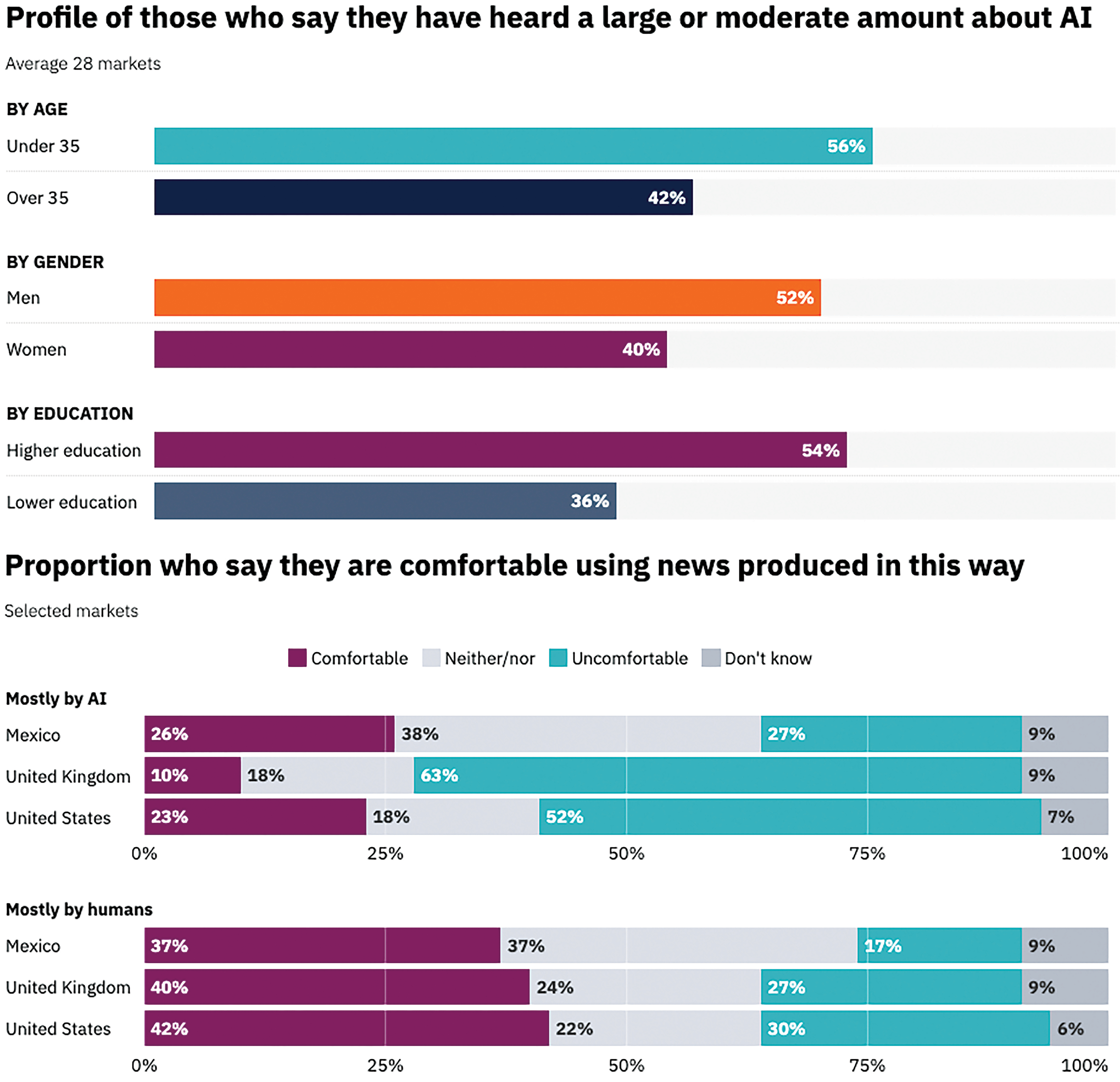

In a 2024 study by the Reuters Institute, researchers found that, in the U.S., fewer than half of people over the age of 35 have heard “a large or moderate amount about AI.”

So, more than a year after ChatGPT’s launch in late 2022, most Americans over 35 still didn’t know much about it.

AI is a complicated, double-edged sword of epic proportions. On one hand, it has the potential to do amazing things that could benefit readers and, possibly, humans in general.

For example, some publications are using it as an accessibility tool, reading the news aloud to people with vision impairments in a livelier, more natural voice than the typical monotone text readers.

It also can help journalists with the needle-in-a-haystack problem. When data or investigative journalists are working with large volumes of documents, AI can help sift through them and flag important details that can lead to more informed and thorough stories. Some would argue this is its greatest strength: identifying and pointing out patterns.

Some large news organizations that have cut many of the reporters who used to cover school boards and town councils in small communities are exploring using AI to summarize government meetings, so readers have some idea of what’s going on.

Others are going even further, using AI to create entire newscasts with AI-generated news anchors who look and sound like real people. Creepy.

On the negative side, AI now inserts itself at the top of your search results, effectively diverting traffic that would otherwise go to the news source: in our case, YourObserver.com.

This has two negative outcomes for us and our readers. First, we lose the traffic that otherwise would come to our page, which we monetize by connecting readers with our advertising partners. AI tools are essentially taking information that we spent time and money gathering and using it to synthesize answers, often poorly and without credit. You may not think that’s a big deal, but the second outcome is that the information provided in AI overviews is often incomplete, misleading or inaccurate.

For example, I Googled, “What are historic property tax rates on Longboat Key, Florida?” The AI Overview from Google responded: “Longboat Key, Florida, located within Sarasota County, offers an ad valorem tax exemption program for property owners making qualifying improvements to properties listed on the Sarasota County Register of Historic Places or similar registers within the county’s municipalities.”

Huh?

Its sources, the links shown to the right of the answer, pointed to the Sarasota County Libraries, the Florida Department of State and “How to Find Property Tax Records in Florida,” posted by a bogus county lookalike account on YouTube.

OK, it thought I meant taxes for historic properties. Let’s try again, with different phrasing.

This time I received an overview of property taxes and how they work … in Sarasota County. No mention that half of Longboat Key is actually in Manatee County.

That’s how I know we will always need human reporters to make sure we see the full picture.

So, how are we at the Observer using AI for our news content?

In short, we aren’t.

About eight months ago, the newsroom met to discuss what we know about AI, how reporters use any AI tools and whether we think they might be useful in the future. Although many of our reporters had played around with ChatGPT, there was 100% consensus, from reporters aged 22 to 67: We don’t trust it, and we’re not going to use it.

I was surprised. I thought for sure someone would see value in it. Help in writing headlines? Using it to rewrite calendar entries? Nope, they said.

This wasn’t because they were scared of losing their jobs. I challenge AI to go cover the county fair, or write a balanced, well-contextualized story on a contentious county commission vote.

They don’t value it because they don’t trust it.

As I’m sure you’ve heard, AI has a tendency to “hallucinate,” meaning it makes up completely false information while trying to answer a question. Just as a person who is hallucinating can be a little scary and unsettling, we as journalists feel the same way when computers do it.

If we can’t rely on this information, then readers can’t rely on us. That’s a nonstarter.

The exception is transcription software, such as Otter.ai, which helps reporters turn recorded interviews into notes more efficiently. We have also tested some copy-editing tools, but, again, the results weren’t great: no improvement over the regular spell and grammar checkers, anyway.

Could we someday use, say, an AI-generated graphic to visualize information that we have independently reported and verified? It’s possible.

Will we keep an open mind about new tools that can augment our journalists in their work? Definitely.

Will we be transparent about our efforts? Always.

But for now, we as a newsroom have decided that being 100% human-powered is a rare thing these days, and as it becomes rarer, it will only make what we do more valuable.

What does this mean for you? Unfortunately, it means legitimate news now swims in the same internet information stream that delivers “AI slop” begging for your attention and spreading misinformation. Think of it as spam, only in your social media and search feeds. So, if you care about what’s real, you’re going to have to be more discerning.

We already know this from social media algorithms. Nothing has been more effective at filtering other points of view out of your feed. “Like” just one post leaning one way or the other politically, and you will be swept into a land of agreement and posts that reinforce your view of the world.

The same is true with AI. If you, say, hit “like” on an AI-generated image of Shrimp Jesus just for fun, you may notice that you are soon served up AI-created videos of cats attacking alligators, people saving drowning kids from a flood and all sorts of things that are unbelievable … because they’re not real.

Some people make money by creating AI images and videos and monetizing them through websites, social media, YouTube and other online platforms. That’s all fine if you want to entertain yourself that way, but know that those platforms are prioritizing this kind of content over news.

The more you watch, the more you’ll see.

The way around all of this AI tomfoolery, fake videos and inaccurate content is what it has always been: Select a trusted source of news when you’re reading, and be intentional about what you reward as a consumer.

As the adage goes, you vote with your dollars. You also vote with your time, your clicks and your likes. Be judicious with them.

Now more than ever, it is essential to know your source and build your media literacy. Call us old-fashioned, but we think there’s value in being able to connect with a real human who creates the content you consume. Someone with a byline, a phone, an email ... and a pulse.

At the Observer, that will remain at the heart of the stories and images our journalists create and the type of community we want to foster.

Sorry, Shrimp Jesus.